Release Notes for v0.7.3

2019-11-27

Smallvec

For this release I experimented with the smallvec crate. From the crate documentation I took the idea that it might improve cache locality and reduce allocator traffic for workloads that fit within the inline buffer. So far, however, I haven't put enough time into measuring whether those changes actually improve performance. I just thought I'd mention them here in the release notes so they won't be forgotten, and maybe revert them later if the performance improvements cannot be verified.
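The following is a minimal, std-only sketch of the idea from the crate documentation. Up to N elements live in an inline array, so there is no heap allocation. Only when the vector grows past N are the elements moved into a heap-allocated Vec. The type MiniSmallVec and its methods are hypothetical and deliberately simplified (for example, it requires T: Copy + Default). This is not the smallvec crate's API and not what rs-pbrt uses; it only illustrates the underlying technique.

```rust
/// Toy "small vector": stores up to N elements inline and spills to the heap
/// once it grows past N. Illustrative only, NOT the smallvec crate's API.
enum MiniSmallVec<T: Copy + Default, const N: usize> {
    /// Elements live in a fixed-size array; `len` counts the used slots.
    Inline { buf: [T; N], len: usize },
    /// Elements have been moved to a heap-allocated Vec.
    Heap(Vec<T>),
}

impl<T: Copy + Default, const N: usize> MiniSmallVec<T, N> {
    fn new() -> Self {
        MiniSmallVec::Inline { buf: [T::default(); N], len: 0 }
    }

    fn push(&mut self, value: T) {
        match self {
            MiniSmallVec::Inline { buf, len } if *len < N => {
                // Still room in the inline buffer: no allocation needed.
                buf[*len] = value;
                *len += 1;
            }
            MiniSmallVec::Inline { buf, len } => {
                // Inline buffer is full: copy everything to the heap once.
                let mut heap = Vec::with_capacity(N * 2);
                heap.extend_from_slice(&buf[..*len]);
                heap.push(value);
                *self = MiniSmallVec::Heap(heap);
            }
            MiniSmallVec::Heap(v) => v.push(value),
        }
    }

    fn as_slice(&self) -> &[T] {
        match self {
            MiniSmallVec::Inline { buf, len } => &buf[..*len],
            MiniSmallVec::Heap(v) => v.as_slice(),
        }
    }

    /// True once the elements have been moved to the heap.
    fn spilled(&self) -> bool {
        matches!(self, MiniSmallVec::Heap(_))
    }
}

fn main() {
    let mut v: MiniSmallVec<u32, 4> = MiniSmallVec::new();
    for i in 0..4 {
        v.push(i);
    }
    println!("{:?} spilled={}", v.as_slice(), v.spilled()); // [0, 1, 2, 3] spilled=false
    v.push(4); // the fifth element forces a heap allocation
    println!("{:?} spilled={}", v.as_slice(), v.spilled()); // [0, 1, 2, 3, 4] spilled=true
}
```

Whether this pays off depends on how often real workloads stay within N. That is exactly what still needs to be measured.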

Traits vs. Enums

I'm still following the suggestion of @matklad to "replace dynamic dispatch with an enum". I already began that process during the last release (v0.7.2), and this release replaces the following traits with enums:

```
> git diff ccd28e9 5750001 | grep "\-pub trait"
-pub trait SamplerIntegrator {
-pub trait Sampler: SamplerClone {
-pub trait PixelSampler: Sampler {}
-pub trait GlobalSampler: Sampler {
-pub trait SamplerClone {
```

This is probably the biggest change with regard to the documentation, because it affects the really important render loops.
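Here is a self-contained sketch of the difference, using a toy sampler. The types ConstSampler, LcgSampler and SamplerEnum are hypothetical simplifications, not rs-pbrt's actual samplers. The SamplerClone helper follows the common Rust pattern for cloning a Box<dyn Trait>, which presumably is why a trait like SamplerClone existed in the first place. With an enum, a plain #[derive(Clone)] is enough, and dispatch becomes a match instead of a vtable call.

```rust
// ---- Before: dynamic dispatch through trait objects ----

/// Helper trait so that a Box<dyn Sampler> can be cloned
/// (`Clone` itself cannot be used on trait objects).
trait SamplerClone {
    fn box_clone(&self) -> Box<dyn Sampler>;
}

/// Blanket impl: any clonable sampler can be cloned behind a Box.
impl<T: 'static + Sampler + Clone> SamplerClone for T {
    fn box_clone(&self) -> Box<dyn Sampler> {
        Box::new(self.clone())
    }
}

trait Sampler: SamplerClone {
    /// Returns the next 1D sample value.
    fn get_1d(&mut self) -> f32;
}

#[derive(Clone)]
struct ConstSampler {
    value: f32,
}

#[derive(Clone)]
struct LcgSampler {
    state: u32,
}

impl LcgSampler {
    fn next_f32(&mut self) -> f32 {
        // Simple linear congruential generator (illustrative, not a good sampler).
        self.state = self.state.wrapping_mul(1_664_525).wrapping_add(1_013_904_223);
        // Keep the top 24 bits so the value is exactly representable; result in [0, 1).
        (self.state >> 8) as f32 / (1u32 << 24) as f32
    }
}

impl Sampler for ConstSampler {
    fn get_1d(&mut self) -> f32 {
        self.value
    }
}

impl Sampler for LcgSampler {
    fn get_1d(&mut self) -> f32 {
        self.next_f32()
    }
}

// ---- After: one enum, dispatch via `match` ----
// (named SamplerEnum here only to avoid clashing with the trait above)

#[derive(Clone)]
enum SamplerEnum {
    Const(ConstSampler),
    Lcg(LcgSampler),
}

impl SamplerEnum {
    fn get_1d(&mut self) -> f32 {
        match self {
            SamplerEnum::Const(s) => s.value,
            SamplerEnum::Lcg(s) => s.next_f32(),
        }
    }
}

fn main() {
    // Trait object: cloning needs the SamplerClone helper.
    let mut boxed: Box<dyn Sampler> = Box::new(LcgSampler { state: 1 });
    let mut boxed_copy = boxed.box_clone();
    // Enum: cloning is an ordinary derived Clone.
    let mut e = SamplerEnum::Lcg(LcgSampler { state: 1 });
    let mut e_copy = e.clone();
    println!("{} {}", boxed.get_1d(), boxed_copy.get_1d());
    println!("{} {}", e.get_1d(), e_copy.get_1d());
}
```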

Let's have a look at the C++ counterpart first. Which C++ classes derive from the base class Integrator? The Rust tool ripgrep helps us find the answer:

```
> rg -tcpp "public Integrator"
integrators/bdpt.h
128:class BDPTIntegrator : public Integrator {

integrators/mlt.h
107:class MLTIntegrator : public Integrator {

integrators/sppm.h
50:class SPPMIntegrator : public Integrator {

core/integrator.h
77:class SamplerIntegrator : public Integrator {
```

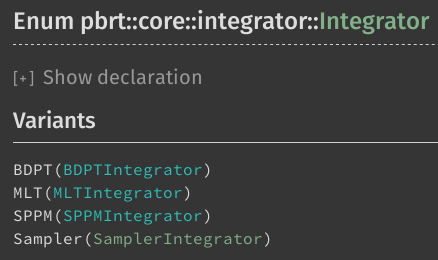

On the Rust side, the same names are used for the variants of the enum Integrator (see its API documentation).

In previous versions I used traits, and each of these (in C++) derived classes had its own render method, named appropriately to indicate the type of integrator being used.

Now we get the same functionality through a single method name (render) for calling the render loops, and the Integrator delegates the work to specialized render methods:

```rust
impl Integrator {
    pub fn render(&mut self, scene: &Scene, num_threads: u8) {
        // Each variant forwards to its own specialized render loop.
        match self {
            Integrator::BDPT(integrator) => integrator.render(scene, num_threads),
            Integrator::MLT(integrator) => integrator.render(scene, num_threads),
            Integrator::SPPM(integrator) => integrator.render(scene, num_threads),
            Integrator::Sampler(integrator) => integrator.render(scene, num_threads),
        }
    }
}
```

All the integrators derived (in C++) from the SamplerIntegrator base class use exactly the same render method. They differ only in how they implement the methods called from within the render loop:

```
> rg -tcpp "public SamplerIntegrator"
integrators/path.h
49:class PathIntegrator : public SamplerIntegrator {

integrators/whitted.h
49:class WhittedIntegrator : public SamplerIntegrator {

integrators/ao.h
48:class AOIntegrator : public SamplerIntegrator {

integrators/directlighting.h
52:class DirectLightingIntegrator : public SamplerIntegrator {

integrators/volpath.h
49:class VolPathIntegrator : public SamplerIntegrator {
```

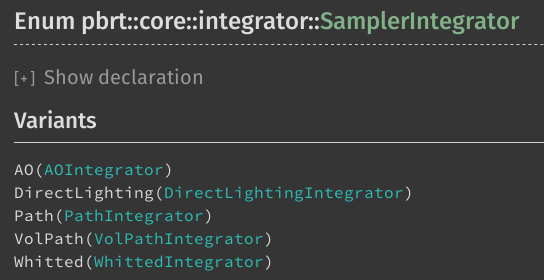

On the Rust side, the same names are used for the variants of the enum SamplerIntegrator (see its API documentation).

The first few lines below are essentially the same Rust code we have already seen:

```
> rg -trust "pub fn render" src/core/integrator.rs -B 1 -A 7
39-impl Integrator {
40:    pub fn render(&mut self, scene: &Scene, num_threads: u8) {
41-        match self {
42-            Integrator::BDPT(integrator) => integrator.render(scene, num_threads),
43-            Integrator::MLT(integrator) => integrator.render(scene, num_threads),
44-            Integrator::SPPM(integrator) => integrator.render(scene, num_threads),
45-            Integrator::Sampler(integrator) => integrator.render(scene, num_threads),
46-        }
47-    }
--
67-    }
68:    pub fn render(&mut self, scene: &Scene, num_threads: u8) {
69-        match self {
70-            _ => {
71-                let film = self.get_camera().get_film();
72-                let sample_bounds: Bounds2i = film.get_sample_bounds();
73-                self.preprocess(scene);
74-                let sample_extent: Vector2i = sample_bounds.diagonal();
75-                let tile_size: i32 = 16;
```

The part starting at line 68, however, shows the beginning of the SamplerIntegrator::render(...) method. It also starts with a match, but it does not delegate to a different version for each SamplerIntegrator variant. Instead, every variant runs exactly the same code (the full method is in src/core/integrator.rs).
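The two-level structure described above can be sketched as a self-contained program. Everything in it is a hypothetical simplification, not rs-pbrt's code:

- Scene is just a resolution, and there is no film, camera, or threading (num_threads is dropped).
- render takes &self instead of &mut self.
- MLT and SPPM are omitted, and the variant names AO, Whitted, and Path only mirror the C++ classes.
- li() returns a dummy value per pixel.

What the sketch does show is the top-level Integrator enum forwarding to a specialized render method, while every SamplerIntegrator variant shares one tiled render loop and differs only in the per-pixel method it calls. The tile count uses the 16-pixel tile size from the excerpt above and rounds up, as pbrt-v3 does. A 500x500 image therefore gives 32x32 = 1024 tiles. That would be consistent with the "1024 / 1024" progress counter in the BDPT example run further down, assuming that counter counts tiles.

```rust
const TILE_SIZE: i32 = 16;

/// Hypothetical stand-in: a "scene" is only an image resolution here.
struct Scene {
    width: i32,
    height: i32,
}

/// Number of tiles along x and y, rounding up so partial tiles are covered.
fn n_tiles(width: i32, height: i32) -> (i32, i32) {
    ((width + TILE_SIZE - 1) / TILE_SIZE, (height + TILE_SIZE - 1) / TILE_SIZE)
}

enum SamplerIntegrator {
    AO,
    Whitted,
    Path { max_depth: u32 },
}

impl SamplerIntegrator {
    /// Variant-specific per-pixel work (dummy values): the only place the variants differ.
    fn li(&self, x: i32, y: i32) -> f32 {
        match self {
            SamplerIntegrator::AO => 0.5,
            SamplerIntegrator::Whitted => ((x + y) % 2) as f32,
            SamplerIntegrator::Path { max_depth } => *max_depth as f32,
        }
    }

    /// Shared tiled render loop, identical for every variant.
    /// Returns the number of tiles processed and the image.
    fn render(&self, scene: &Scene) -> (i32, Vec<f32>) {
        let (nx, ny) = n_tiles(scene.width, scene.height);
        let mut image = vec![0.0; (scene.width * scene.height) as usize];
        let mut tiles_done = 0;
        for ty in 0..ny {
            for tx in 0..nx {
                // Clamp the tile to the image (the last row/column may be partial).
                let (x0, y0) = (tx * TILE_SIZE, ty * TILE_SIZE);
                let x1 = (x0 + TILE_SIZE).min(scene.width);
                let y1 = (y0 + TILE_SIZE).min(scene.height);
                for y in y0..y1 {
                    for x in x0..x1 {
                        image[(y * scene.width + x) as usize] = self.li(x, y);
                    }
                }
                tiles_done += 1;
            }
        }
        (tiles_done, image)
    }
}

/// Placeholder for an integrator with its own, completely different render loop.
struct Bdpt;

impl Bdpt {
    fn render(&self, scene: &Scene) -> (i32, Vec<f32>) {
        let (nx, ny) = n_tiles(scene.width, scene.height);
        (nx * ny, vec![1.0; (scene.width * scene.height) as usize])
    }
}

enum Integrator {
    Bdpt(Bdpt),
    Sampler(SamplerIntegrator),
}

impl Integrator {
    /// Top level: forward to the specialized render method of each variant.
    fn render(&self, scene: &Scene) -> (i32, Vec<f32>) {
        match self {
            Integrator::Bdpt(i) => i.render(scene),
            Integrator::Sampler(i) => i.render(scene),
        }
    }
}

fn main() {
    let scene = Scene { width: 500, height: 500 };
    let integrator = Integrator::Sampler(SamplerIntegrator::Path { max_depth: 5 });
    let (tiles, image) = integrator.render(&scene);
    println!("{} tiles, {} pixels", tiles, image.len()); // 1024 tiles, 250000 pixels
}
```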

Blender

Finally, let's talk a bit about the Blender-related workflow. The goal is not to have the best integration of rs-pbrt into Blender. Blender comes with its own renderer, Cycles, and that one is of course well integrated. The goal is to have:

- Many Blender scenes that can be used to compare renderers without having to deal with all the different options, materials, shaders, etc. that each individual renderer might provide.

- Some Python scripts that export to the individual scene descriptions, which are normally specific to each renderer. For materials and shaders, some heuristics are used to translate settings within Blender into renderer-specific ones.

- To test and stabilize the API of rs-pbrt (which could eventually be separated out and given to others in the form of a Rust crate), there is an experimental executable called parse_blend_file. It renders Blender's scene files (extension .blend) directly by picking only the bits and pieces of the binary file format that can be translated directly into API calls for rs-pbrt.
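The line BLENDER-v279 printed by parse_blend_file in the example run further below looks like the fixed 12-byte header at the start of an uncompressed .blend file. The header consists of:

- the magic word BLENDER;
- one byte for the pointer size ('_' for 4 bytes, '-' for 8 bytes);
- one byte for the endianness ('v' for little-endian, 'V' for big-endian);
- three ASCII digits for the Blender version.

The following sketch parses only that header, as a small example of "picking bits and pieces" from the binary format. It is a hypothetical, std-only illustration, not the code used by parse_blend_file, and it does not handle gzip-compressed .blend files.

```rust
use std::fs::File;
use std::io::Read;

/// Information stored in the fixed 12-byte header of a .blend file.
#[derive(Debug, PartialEq)]
struct BlendHeader {
    pointer_size: usize, // '_' => 4 bytes, '-' => 8 bytes
    little_endian: bool, // 'v' => little-endian, 'V' => big-endian
    version: u32,        // three ASCII digits, e.g. "279" => 279
}

fn parse_blend_header(bytes: &[u8]) -> Result<BlendHeader, String> {
    if bytes.len() < 12 {
        return Err(format!("need 12 header bytes, got {}", bytes.len()));
    }
    if !bytes.starts_with(b"BLENDER") {
        return Err("missing BLENDER magic (compressed or not a .blend file?)".to_string());
    }
    let pointer_size = match bytes[7] {
        b'_' => 4,
        b'-' => 8,
        other => return Err(format!("unknown pointer-size byte {:?}", other as char)),
    };
    let little_endian = match bytes[8] {
        b'v' => true,
        b'V' => false,
        other => return Err(format!("unknown endianness byte {:?}", other as char)),
    };
    let digits = &bytes[9..12];
    if !digits.iter().all(u8::is_ascii_digit) {
        return Err("version is not three ASCII digits".to_string());
    }
    let version = digits.iter().fold(0u32, |acc, d| acc * 10 + u32::from(d - b'0'));
    Ok(BlendHeader { pointer_size, little_endian, version })
}

/// Reads just the first 12 bytes of a file and parses them.
fn read_blend_header(path: &str) -> Result<BlendHeader, String> {
    let mut buf = [0u8; 12];
    File::open(path)
        .and_then(|mut f| f.read_exact(&mut buf))
        .map_err(|e| e.to_string())?;
    parse_blend_header(&buf)
}

fn main() {
    // With a path argument, read a real file; otherwise parse the sample header.
    match std::env::args().nth(1) {
        Some(path) => println!("{:?}", read_blend_header(&path)),
        None => println!("{:?}", parse_blend_header(b"BLENDER-v279")),
    }
}
```

The pointer size and endianness matter because every block that follows the header stores pointers and integers using them.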

None of this is implemented to production quality, but bits and pieces work and are constantly adjusted to support my rendering research. Here are some pointers to where you can find Blender scenes and the related source code:

- I started collecting Blender scenes (and some other simple scenes) in various scene formats for different renderers here. Some of the scenes originally came from Radiance, so I had to write Python scripts to parse those files and bring them into Blender. That source code, however, is neither well documented nor really used anymore.

- Once I had scenes in Blender, I wrote Python scripts to export them, and the source code is still available here (nowadays mainly the sub-directory io_scene_multi; a German article (PDF) about this was published by the Digital Production magazine).

- The parse_blend_file executable can be found in the examples folder and is described, for example, here.

New in this release is support for some of the primitive shapes (spheres, disks, and cylinders). The sub-directory assets/blend/python contains Python scripts for Blender that should help you create those primitive shapes within Blender and name them according to a naming convention. parse_blend_file can then detect them and replace the meshes (which are also stored in the .blend files) with proper API calls, e.g.:

```rust
let cylinder = Arc::new(Shape::Clndr(Cylinder::new(
    object_to_world,
    world_to_object,
    false,
    radius,
    z_min,
    z_max,
    phi_max,
)));
// ...
let disk = Arc::new(Shape::Dsk(Disk::new(
    object_to_world,
    world_to_object,
    false,
    height,
    radius,
    inner_radius,
    phi_max,
)));
// ...
let sphere = Arc::new(Shape::Sphr(Sphere::new(
    object_to_world,
    world_to_object,
    false,
    radius,
    z_min,
    z_max,
    phi_max,
)));
// ...
let triangle = Arc::new(Shape::Trngl(Triangle::new(
    mesh.object_to_world,
    mesh.world_to_object,
    mesh.transform_swaps_handedness,
    mesh.clone(),
    id,
)));
```

Some Blender files ship with the source code:

```
> ls -1 assets/blend/pbrt-*.blend
assets/blend/pbrt-cylinders_2_79.blend
assets/blend/pbrt-disks_2_79.blend
assets/blend/pbrt-sphere_2_79.blend
assets/blend/pbrt-spheres_2_79.blend
```

Using those primitives does not make much sense for modelling scene geometry, but they are very useful as light emitters. Convince yourself by comparing the rendering times of scenes that use those primitives as light emitters with scenes where the emitters are modelled with (many) triangles instead.
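One plausible part of the reason: the constructor calls shown above need only a handful of parameters, and from them an analytic shape has a closed-form surface area. That area directly gives the probability density (1 / area) of sampling a point uniformly on the emitter. A triangle-mesh emitter, by contrast, has to handle many small triangles. The sketch below uses the area formulas from pbrt-v3. SimpleShape is a hypothetical stand-in that drops the transforms and the orientation flag. It assumes z_min and z_max are already within [-radius, radius], and it makes no claim about how rs-pbrt structures its light sampling.

```rust
use std::f64::consts::PI;

/// Hypothetical stand-in for the shape parameters passed to Sphere::new,
/// Disk::new and Cylinder::new (transforms and orientation flag omitted).
enum SimpleShape {
    Sphere { radius: f64, z_min: f64, z_max: f64, phi_max: f64 },
    Disk { height: f64, radius: f64, inner_radius: f64, phi_max: f64 },
    Cylinder { radius: f64, z_min: f64, z_max: f64, phi_max: f64 },
}

impl SimpleShape {
    /// Closed-form surface area (same formulas as pbrt-v3's Area() methods).
    fn area(&self) -> f64 {
        match self {
            SimpleShape::Sphere { radius, z_min, z_max, phi_max } => {
                phi_max * radius * (z_max - z_min)
            }
            // The disk's height only places it along z; it does not affect the area.
            SimpleShape::Disk { radius, inner_radius, phi_max, .. } => {
                phi_max * 0.5 * (radius * radius - inner_radius * inner_radius)
            }
            SimpleShape::Cylinder { radius, z_min, z_max, phi_max } => {
                (z_max - z_min) * radius * phi_max
            }
        }
    }

    /// Density (with respect to area) of picking a point uniformly on the surface.
    fn pdf_area(&self) -> f64 {
        1.0 / self.area()
    }
}

fn main() {
    let full_sphere = SimpleShape::Sphere { radius: 1.0, z_min: -1.0, z_max: 1.0, phi_max: 2.0 * PI };
    println!("{:.4}", full_sphere.area()); // 12.5664 (= 4 * pi)
}
```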

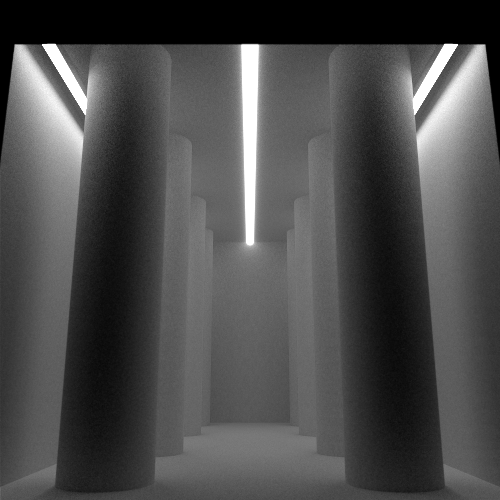

Here is an example of how to render one of those files, using command line arguments to select the integrator and adjust some parameters:

```
> parse_blend_file --integrator bdpt --light_scale 0.1 --samples 256 pbrt-cylinders_2_79.blend
parse_blend_file version 0.7.3 [Detected 8 cores]
BLENDER-v279
677472 bytes read
number of lights = 3
number of primitives = 25
500x500 [100%] = 500x500
integrator = "bdpt" [Bidirectional Path Tracing (BDPT)]
pixelsamples = 256
max_depth = 5
Rendering with 8 thread(s) ...
1024 / 1024 [========================================================================] 100.00 % 1.01/s
Writing image "pbrt.png" with bounds Bounds2 { p_min: Point2 { x: 0, y: 0 }, p_max: Point2 { x: 500, y: 500 } }
```

And the resulting image:

The End

I hope I didn't forget anything important. Have fun and enjoy the v0.7.3 release.